How Changing One Law Could Protect Kids From Social Media

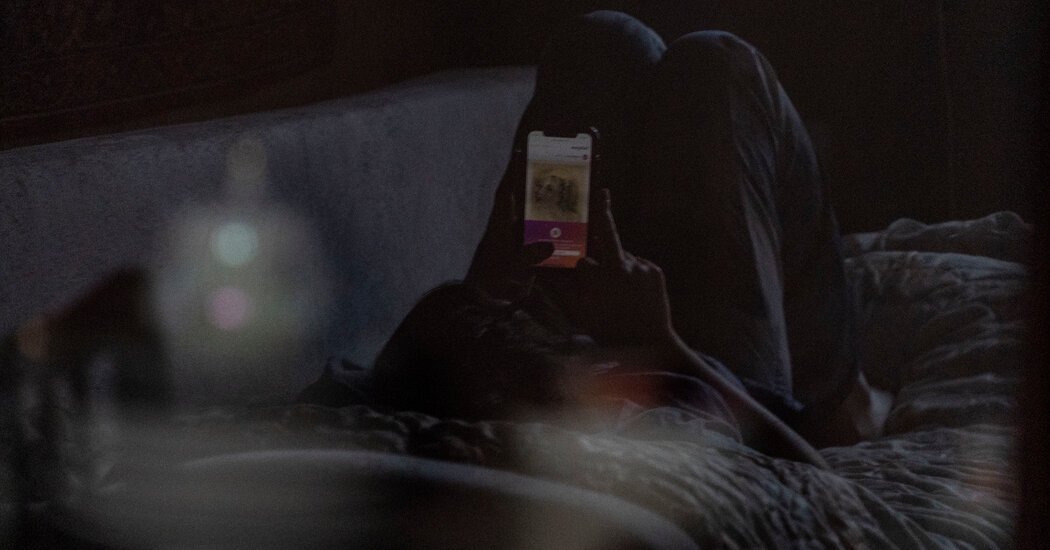

Parenthood has always been fraught with worry and guilt, but parents in the age of social media have increasingly confronted a distinctly acute kind of powerlessness. Their kids are unwitting subjects in a remarkable experiment in human social forms, building habits and relationships in an unruly environment designed mostly to maximize intense engagement in the service of advertisers.

It’s not that social media has no redeeming value, but on the whole it is no place for kids. If Instagram or TikTok were brick-and-mortar spaces in your neighborhood, you probably would never let even your teenager go to them alone. Parents should have the same say over their children’s presence in these virtual spaces.

We may have the vague impression that that would be impossible, but it isn’t. There is a plausible, legitimate, effective tool at our society’s disposal to empower parents against the risks of social media: We should raise the age requirement for social media use, and give it real teeth.

It might come as a surprise to most Americans that there is an age requirement at all. But the Children’s Online Privacy Protection Act, enacted in 1998, prohibits American companies from collecting personal information from children under 13 without parental consent, or from collecting more personal information than they need to operate a service aimed at children under 13. As a practical matter, this means kids under 13 can’t have social media accounts, since the business models of the platforms all depend on collecting personal data. Technically, the major social media companies require users to be older than 12.

But that rule is routinely ignored. Almost 40 percent of American children ages 8 to 12 use social media, according to a recent survey by Common Sense Media. The platforms generally have users self-certify that they are old enough, and they have no incentive to make it hard to lie. On the contrary, as a 2020 internal Facebook memo leaked to The Wall Street Journal made clear, the social media giant is especially eager to attract “tweens,” whom it views as “a valuable but untapped audience.”

Quantifying the dangers involved has been a challenge for researchers, and there are certainly those who say the risks are overstated. But there is evidence that social media exposure poses serious harms for tweens and older kids, too. The platform companies’ own research suggests as much. Internal documents from Facebook — now known as Meta — regarding the use of its Instagram platform by teens point to real concerns. “We make body image issues worse for one in three teen girls,” the researchers noted in one leaked slide. Documents also pointed to potential links between regular social media use and depression, self-harm and, to some extent, even suicide.

TikTok, which is also very popular with tweens and teens, has — alongside other social media platforms — been linked to body image issues as well, and to problems ranging from muscle dysmorphia to a Tourette’s-like syndrome, sexual exploitation and assorted deadly stunts. More old-fashioned problems like bullying, harassment and conspiracism are also often amplified and exacerbated by the platforms’ mediation of the social lives of kids.

Social media has benefits for young people, too. They can find connection and support, discover things and hone their curiosity. In responding to critical reports on its own research, Facebook noted that it found that by some measures, Instagram “helps many teens who are struggling with some of the hardest issues they experience.”

Restrictions on access to the platforms would come with real costs. But, as Jonathan Haidt of New York University has put it, “The preponderance of the evidence now available is disturbing enough to warrant action.” Some teen users of social media see the problem, too. As one of Meta’s leaked slides put it, “Young people are acutely aware that Instagram can be bad for their mental health yet, are compelled to spend time on the app for fear of missing out on cultural and social trends.”

That balance of pressures needs to change. And as the journalist and historian Christine Rosen has noted, preaching “media literacy” and monitoring screen time won’t be enough.

Policymakers can help. By raising the Children’s Online Privacy Protection Act’s minimum age from 13 to 18 (with an option for parents to verifiably approve an exemption for their kids as the law already permits), and by providing for effective age verification and meaningful penalties for the platforms, Congress could offer parents a powerful tool to push back against the pressure to use social media.

Reliable age verification is feasible. For instance, as the policy analyst Chris Griswold has proposed, the Social Security Administration (which knows exactly how old you are) “could offer a service through which an American could type his Social Security number into a secure federal website and receive a temporary, anonymized code via email or text,” like the dual authentication methods commonly used by banks and retailers. With that code, the platforms could confirm your age without obtaining any other personal information about you.
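To make the idea concrete, here is a minimal sketch of how such a code exchange might work. It is only an illustration under stated assumptions, not Mr. Griswold's design or any real government system: the class `AgeTokenService`, its methods, the ten-minute expiry and the made-up ID numbers are all hypothetical, and the code is handed back directly rather than sent by email or text.

```python
import secrets
import time
from datetime import date


def age_on(dob, today):
    """Whole years between a date of birth and a given day."""
    years = today.year - dob.year
    if (today.month, today.day) < (dob.month, dob.day):
        years -= 1  # birthday has not yet occurred this year
    return years


class AgeTokenService:
    """Hypothetical stand-in for the government-run verification service."""

    def __init__(self, birthdates, ttl_seconds=600):
        self._birthdates = birthdates  # ID number -> date of birth, held only here
        self._ttl = ttl_seconds        # assumed lifetime of a code, in seconds
        self._pending = {}             # code -> (date of birth, expiry time)

    def issue_code(self, id_number, now=None):
        """Citizen side: return a random one-time code, or None if the ID is unknown."""
        now = time.time() if now is None else now
        dob = self._birthdates.get(id_number)
        if dob is None:
            return None
        code = secrets.token_urlsafe(16)  # random, so it reveals nothing about the person
        self._pending[code] = (dob, now + self._ttl)
        return code

    def redeem(self, code, minimum_age, today=None, now=None):
        """Platform side: learn only yes or no. Each code works once."""
        now = time.time() if now is None else now
        today = date.today() if today is None else today
        entry = self._pending.pop(code, None)  # pop makes the code single-use
        if entry is None:
            return False
        dob, expires_at = entry
        if now > expires_at:
            return False
        return age_on(dob, today) >= minimum_age


# Made-up records for illustration only.
service = AgeTokenService({
    "000-00-0001": date(2000, 1, 1),
    "000-00-0002": date(2012, 6, 15),
})
code = service.issue_code("000-00-0001")
print(service.redeem(code, 18, today=date(2024, 1, 1)))  # True
print(service.redeem(code, 18, today=date(2024, 1, 1)))  # False, the code was already used
```

The point of the design is that the platform never sees a name, a birth date or an ID number. It passes along the code and gets back a single yes or no.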

Some teens would find ways to cheat, and the age requirement would be porous at the margins. But the draw of the platforms is a function of network effects — everyone wants to be on because everyone else is on. The age requirement only has to be passably effective to be transformative — as the age requirement takes hold, it would also be less true that everyone else is on.

Real age verification would also make it possible to more effectively restrict access to online pornography — a vast, dehumanizing scourge that our society has inexplicably decided to pretend it can do nothing about. Here, too, concerns about free speech, whatever their merits, surely don’t apply to children.

It may seem strange to get at the challenge of children’s use of social media through online privacy protections, but that path actually offers some distinct advantages. The Children’s Online Privacy Protection Act already exists as a legal mechanism. Its framework also lets parents opt in for their kids if they choose. It can be a laborious process, but parents who feel strongly that their kids should be on social media could allow it.

This approach would also get at a core problem with the social media platforms. Their business model — in which users’ personal information and attention are the essence of the product that the companies sell to advertisers — is key to why the platforms are designed in ways that encourage addiction, aggression, bullying, conspiracies and other antisocial behaviors. If the companies want to create a version of social media geared to children, they will need to design platforms that don’t monetize user data and engagement in that way — and so don’t involve those incentives — and then let parents see what they think.

Empowering parents is really the key to this approach. It was a mistake to let kids and teens onto the platforms in the first place. But we are not powerless to correct that mistake.

Yuval Levin, a contributing Opinion writer, is the editor of National Affairs and the director of social, cultural and constitutional studies at the American Enterprise Institute. He is the author of “A Time to Build: From Family and Community to Congress and the Campus, How Recommitting to Our Institutions Can Revive the American Dream.”
